
Second Piece -- We always factor so that the number in parentheses equals $(1 + \textrm{ some }\color{red}{\textrm{fraction}})$. Since we know that the $1$ is there, the only important thing is the fraction, which we will write as a binary string.

If we need to convert from the binary value back to a base-10 value, we just multiply each digit by its place value, as in these examples:

$$0.1_{binary} = 2^{-1} = 0.5$$

$$0.01_{binary} = 2^{-2} = 0.25$$

$$0.101_{binary} = 2^{-1} + 2^{-3} = 0.625$$

Third Piece -- The power of 2 that you got in the last step is simply an integer. Note, this integer may be positive or negative, depending on whether the original value was large or small, respectively. We'll need to store this exponent -- however, using the two's complement, the usual representation for signed values, makes comparisons of these values more difficult. As such, we add a constant value, called a bias, to the exponent. By biasing the exponent before it is stored, we put it within an unsigned range more suitable for comparison.

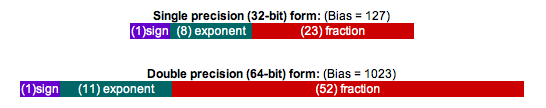

For single-precision floating-point, exponents in the range -126 to +127 are biased by adding 127 to get a value in the range 1 to 254 (0 and 255 have special meanings).

For double-precision, exponents in the range -1022 to +1023 are biased by adding 1023 to get a value in the range 1 to 2046 (0 and 2047 have special meanings).

The sum of the bias and the power of 2 is the exponent that actually goes into the IEEE 754 string. Remember, the exponent = power + bias. (Alternatively, the power = exponent-bias). This exponent must itself ultimately be expressed in binary form -- but given that we have a positive integer after adding the bias, this can now be done in the normal way.
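The relation exponent = power + bias can be checked directly in Java for single precision. The following is a minimal sketch: the class and method names are made up for illustration, while `Float.floatToIntBits` and `Math.getExponent` are standard library calls. It assumes normalized values only (stored exponent between 1 and 254).

```java
class BiasedExponentDemo {
    static final int FLOAT_BIAS = 127; // single-precision bias

    // Returns the 8-bit biased exponent field stored in a float.
    static int biasedExponent(float value) {
        int bits = Float.floatToIntBits(value);
        return (bits >>> 23) & 0xFF; // skip the 23 fraction bits, mask off the sign
    }

    // Recovers the power of 2: power = exponent - bias (normalized values only).
    static int powerOfTwo(float value) {
        return biasedExponent(value) - FLOAT_BIAS;
    }

    public static void main(String[] args) {
        System.out.println(biasedExponent(8.0f));    // 8 = 1.0 x 2^3, so 3 + 127 = 130
        System.out.println(powerOfTwo(0.25f));       // 0.25 = 1.0 x 2^-2, so -2
        System.out.println(Math.getExponent(0.25f)); // library equivalent, also -2
    }
}
```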

By arranging the fields in this way, so that the sign bit is in the most significant bit position, the biased exponent in the middle, then the mantissa in the least significant bits -- the resulting value will actually be ordered properly for comparisons, whether it's interpreted as a floating-point value or as a sign-magnitude integer value. This allows high-speed comparisons of floating-point numbers using fixed-point hardware.
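The ordering property is easy to observe for non-negative values. The sketch below (hypothetical class and method names) compares two floats by their raw bit patterns only. It assumes both inputs are non-negative and not NaN; because the format is sign-magnitude rather than two's complement, a plain signed integer comparison gives the reverse order for two negative values, as the last line shows.

```java
class BitOrderDemo {
    // Compares two floats by treating their 32-bit patterns as plain integers.
    // Matches the floating-point order only for non-negative, non-NaN inputs.
    static boolean lessThanByBits(float a, float b) {
        return Float.floatToIntBits(a) < Float.floatToIntBits(b);
    }

    public static void main(String[] args) {
        System.out.println(lessThanByBits(0.085f, 0.5f)); // true, same as 0.085f < 0.5f
        System.out.println(lessThanByBits(3.0f, 1.0f));   // false, same as 3.0f < 1.0f
        // Caveat: for two negative values the integer order is reversed.
        System.out.println(lessThanByBits(-2.0f, -1.0f)); // false, although -2.0f < -1.0f
    }
}
```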

There are some special cases:

Zero

Sign bit = $0$ (or $1$ for negative zero); biased exponent = all $0$ bits; and the fraction = all $0$ bits;

Positive and Negative Infinity

Sign bit = $0$ for positive infinity, $1$ for negative infinity; biased exponent = all $1$ bits; and the fraction = all $0$ bits;

NaN (Not-A-Number)

Sign bit = $0$ or $1$; biased exponent = all $1$ bits; and the fraction is anything but all $0$ bits. (NaNs pop up when one does an invalid operation on a floating-point value, such as dividing zero by zero, or taking the square root of a negative number. Dividing a nonzero value by zero gives an infinity instead.)
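These bit patterns can be inspected in Java. The sketch below uses a hypothetical helper, `hex`, to print the 32-bit pattern of each special value. Note that `Float.floatToIntBits` collapses every NaN to one canonical pattern, so the NaN line shows only one of the many valid NaN encodings.

```java
class SpecialValuesDemo {
    // Formats the raw 32-bit pattern of a float as 8 hex digits.
    static String hex(float value) {
        return String.format("0x%08X", Float.floatToIntBits(value));
    }

    public static void main(String[] args) {
        System.out.println(hex(0.0f));                    // 0x00000000: all fields zero
        System.out.println(hex(Float.POSITIVE_INFINITY)); // 0x7F800000: exponent all 1s, fraction 0
        System.out.println(hex(Float.NEGATIVE_INFINITY)); // 0xFF800000: same, with sign bit set
        System.out.println(hex(Float.NaN));               // 0x7FC00000: exponent all 1s, fraction nonzero

        float zero = 0.0f;
        System.out.println(1.0f / zero);     // Infinity (nonzero divided by zero)
        System.out.println(zero / zero);     // NaN (zero divided by zero)
        System.out.println(Math.sqrt(-1.0)); // NaN (square root of a negative number)
    }
}
```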

Next, we write 0.085 in base-2 scientific notation

This means that we must factor it into a number in the range $(1 \le n < 2)$ and a power of 2. Here, $0.085 = 1.36 \times 2^{-4}$, so the fraction is $0.36$ and the power of 2 is $-4$.

Then, we write the fraction in binary form

Successive multiplications by 2 (while temporarily ignoring the units digit) quickly yield the binary form:

$$\begin{array}{l}
0.36 \times 2 = 0.72 \\
0.72 \times 2 = 1.44 \\
0.44 \times 2 = 0.88 \\
0.88 \times 2 = 1.76 \\
0.76 \times 2 = 1.52 \\
0.52 \times 2 = 1.04 \\
0.04 \times 2 = 0.08 \\
0.08 \times 2 = 0.16 \\
0.16 \times 2 = 0.32 \\
0.32 \times 2 = 0.64 \\
0.64 \times 2 = 1.28 \\
0.28 \times 2 = 0.56 \\
0.56 \times 2 = 1.12 \\
0.12 \times 2 = 0.24 \\
0.24 \times 2 = 0.48 \\
0.48 \times 2 = 0.96 \\
0.96 \times 2 = 1.92 \\
0.92 \times 2 = 1.84 \\
0.84 \times 2 = 1.68 \\
0.68 \times 2 = 1.36 \\
0.36 \times 2 = \ldots \textrm{ (at this point the list starts repeating)}
\end{array}$$

Once this process terminates or starts repeating, we read the unit's digits from top to bottom to reveal the binary form for 0.36:

$$0.01011100001010001111010111000\ldots$$
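The repeated-doubling procedure can be sketched in Java as follows. The class and method names are hypothetical, and the fraction is passed as an integer numerator and denominator (36/100 for 0.36) so that every doubling step is exact, rather than itself being subject to floating-point rounding.

```java
class BinaryFractionDemo {
    // Returns the first `count` binary digits of numerator/denominator,
    // a fraction assumed to lie in [0, 1), using repeated doubling.
    static String binaryDigits(long numerator, long denominator, int count) {
        StringBuilder digits = new StringBuilder();
        long remainder = numerator;
        for (int i = 0; i < count; i++) {
            remainder *= 2;                 // multiply by 2
            if (remainder >= denominator) { // units digit is 1
                digits.append('1');
                remainder -= denominator;   // drop the units digit and continue
            } else {                        // units digit is 0
                digits.append('0');
            }
        }
        return digits.toString();
    }

    public static void main(String[] args) {
        System.out.println("0." + binaryDigits(36, 100, 29)); // 0.01011100001010001111010111000
        System.out.println("0." + binaryDigits(5, 8, 3));     // 0.101, i.e. 0.625
    }
}
```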

As you can see, 0.36 has a non-terminating, repeating binary form. This is very similar to how a fraction like 5/27 has a non-terminating, repeating decimal form (i.e., 0.185185185...).

However, single-precision format only affords us 23 bits to work with to represent the fraction part of our number. We will have to settle for an approximation, rounding things to the 23rd digit. One should be careful here -- while it doesn't happen in this example, rounding can affect more than just the last digit. This shouldn't be surprising -- consider what happens when one rounds in base 10 the value 123999.5 to the nearest integer and gets 124000. Rounding the infinite string of digits found above to just 23 digits results in the bits 0.01011100001010001111011.

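(Note, we round "up" because the discarded bits, 11100001..., read as the binary fraction 0.11100001..., are greater than one half of the last retained place, i.e., greater than the decimal value 0.5.)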
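One way to double-check the rounded fraction is to ask Java which 23 bits it actually stores for `0.085f`. This is a sketch with hypothetical names; it relies on the Java compiler rounding the literal to the nearest representable float, and on 0.085 being 1.36 times a power of 2, so the stored fraction is the rounded form of 0.36.

```java
class StoredFractionDemo {
    // Returns the 23 stored fraction bits of a float as a zero-padded binary string.
    static String storedFraction(float value) {
        int fraction = Float.floatToIntBits(value) & 0x007FFFFF; // keep the low 23 bits
        String bits = Integer.toBinaryString(fraction);
        return String.format("%23s", bits).replace(' ', '0');    // restore leading zeros
    }

    public static void main(String[] args) {
        // Truncating the infinite expansion would end in ...010; rounding gives ...011.
        System.out.println(storedFraction(0.085f)); // 01011100001010001111011
    }
}
```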

This rounding that we have to perform to get our value to fit into the number of bits afforded to us is why floating-point numbers frequently have some small degree of error when you put them in IEEE 754 format. It is very important to remember the presence of this error when using the standard Java types (float and double) for representing floating-point numbers!
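The error can be made visible in Java. In the sketch below (hypothetical class and method names), `new BigDecimal(double)` gives the exact decimal expansion of whatever binary value is stored, so printing it shows how far the stored `float` is from 0.085. Because the fraction was rounded up, the stored value is slightly larger than 0.085.

```java
import java.math.BigDecimal;

class RoundingErrorDemo {
    // Exact decimal value of what a float stores (float widens to double without loss).
    static BigDecimal storedValue(float value) {
        return new BigDecimal((double) value);
    }

    public static void main(String[] args) {
        BigDecimal stored = storedValue(0.085f);
        BigDecimal intended = new BigDecimal("0.085");
        System.out.println(stored);                      // exact stored value, not exactly 0.085
        System.out.println(stored.subtract(intended));   // the (small, positive) rounding error
        System.out.println((double) 0.085f == 0.085);    // false: float and double round differently
    }
}
```

A practical consequence is that comparing floating-point values with `==` after arithmetic or conversions is fragile; comparing within a small tolerance is a common alternative.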

Finally, we put the binary strings in the correct order.

Recall, we use 1 bit for the sign, followed by 8 bits for the exponent, and 23 bits for the fraction.

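So 0.085 in IEEE 754 single-precision format is:

0 01111011 01011100001010001111011

(The sign bit is $0$ because the value is positive; the biased exponent is $-4 + 127 = 123 = 01111011_{binary}$, since $0.085 = 1.36 \times 2^{-4}$; the last 23 bits are the rounded fraction.)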
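The assembly step can be sketched in Java by shifting each field into place. The names below are hypothetical; the inputs are the sign bit 0, the biased exponent -4 + 127 = 123 (from 0.085 = 1.36 x 2^-4), and the 23 rounded fraction bits found above. `Float.intBitsToFloat` then reinterprets the 32-bit pattern as a `float`.

```java
class AssembleFloatDemo {
    // Packs sign (1 bit), biased exponent (8 bits) and fraction (23 bits) into 32 bits.
    static int assemble(int sign, int biasedExponent, int fraction) {
        return (sign << 31) | (biasedExponent << 23) | fraction;
    }

    public static void main(String[] args) {
        int fraction = Integer.parseInt("01011100001010001111011", 2);
        int bits = assemble(0, -4 + 127, fraction);

        System.out.println(String.format("0x%08X", bits));        // 0x3DAE147B
        System.out.println(Float.intBitsToFloat(bits));            // 0.085
        System.out.println(bits == Float.floatToIntBits(0.085f));  // true
    }
}
```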

Next, we get the exponent and the correct bias

To get the exponent, we simply convert the binary number 10000001 back to base-10 form, yielding 129.

Remember that we will have to subtract an appropriate bias from this exponent to find the power of 2 we need. Since this is a single-precision number, the bias is 127.
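As a quick check of this decoding step, the sketch below (hypothetical names, single precision assumed) parses the 8 exponent bits and subtracts the bias of 127.

```java
class DecodeExponentDemo {
    static final int FLOAT_BIAS = 127; // single-precision bias

    // Converts an 8-character binary exponent field to the power of 2 it encodes.
    // Assumes a normalized value (field not all 0s and not all 1s).
    static int powerFromExponentBits(String exponentBits) {
        int biased = Integer.parseInt(exponentBits, 2); // binary string to base 10
        return biased - FLOAT_BIAS;                     // power = exponent - bias
    }

    public static void main(String[] args) {
        System.out.println(Integer.parseInt("10000001", 2));   // 129
        System.out.println(powerFromExponentBits("10000001")); // 129 - 127 = 2
    }
}
```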